Building High-Performance Computing Clusters¶

For more information on what a scientific High-Performance Computing (HPC) cluster is and what it is used for, see the following video:

Video

In this tutorial, we will build an HPC cluster using virtual infrastructure.

Danger

Note that the virtual machines may be refreshed (reinstalled) occasionally. Do not store necessary files/scripts on the hn, wn01, or wn02 virtual machines. Create/modify your scripts on the jump node instead.

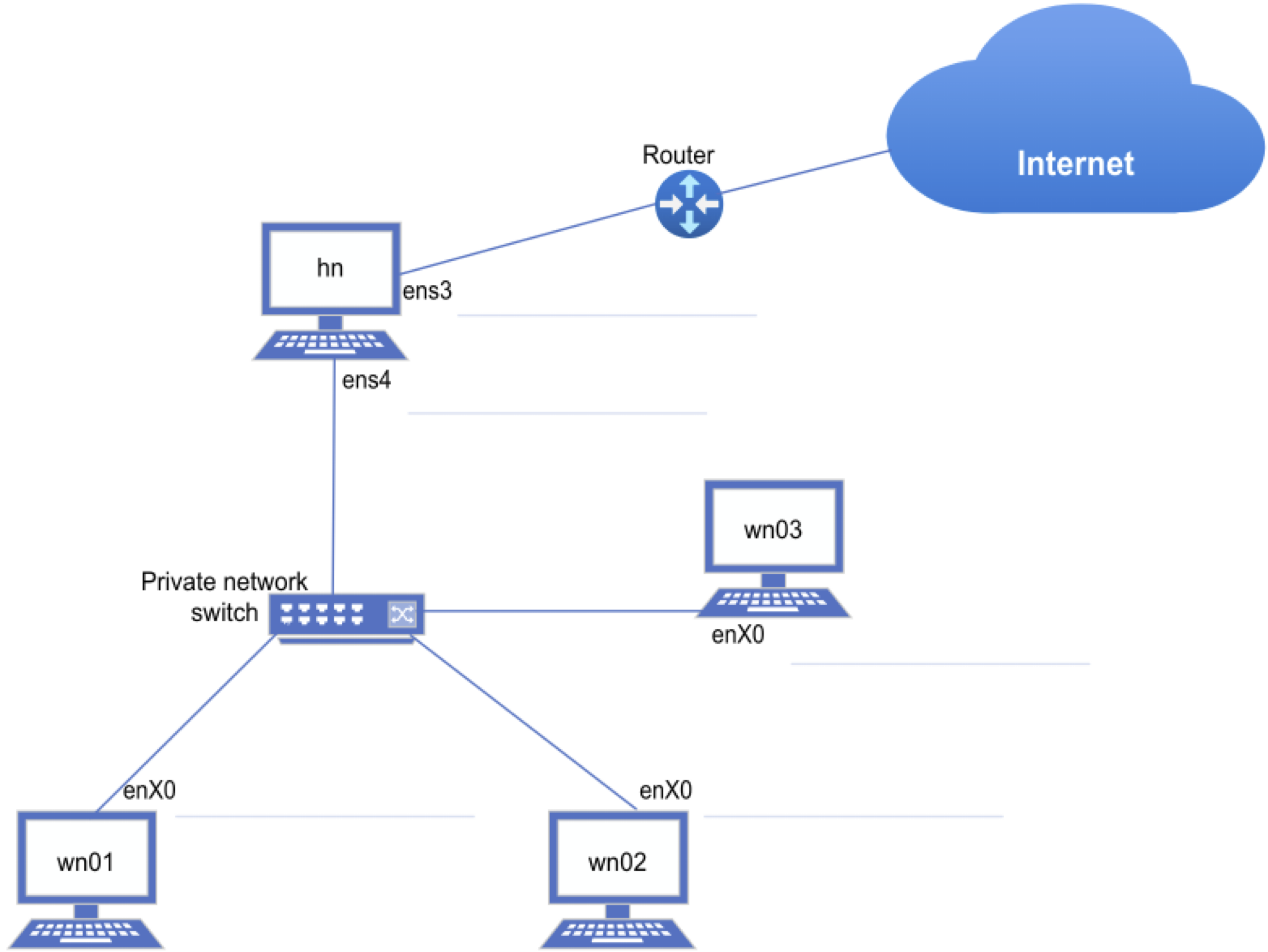

We will install a three-node cluster with the following basic topology:

Planning¶

A crucial part of installing an HPC cluster is ensuring the configuration is known before starting.

The essential parts of the design are:

- Choosing the correct hardware

- Designing a sensible yet secure topology

- Outlining the networking (e.g. IP Addressing)

- Selecting the correct operating system

- Selecting the correct resource management system

All the above should be done before beginning your installation. For this tutorial, the design and outlines were created on behalf of students. However, some hardware device names and IP addresses may differ from those documented here.

Note

If your configuration differs from what is discussed here, use the technical document to capture the differences.

Physical installation¶

This tutorial is based on virtual infrastructure. Each student's private network is isolated from the other students using VLANs. Should you want to install the cluster on physical hardware, this guide can still be used: wherever cloud infrastructure is mentioned, simply use the corresponding physical machine.

The following sections will briefly explain some of the required actions to install a scientific high-performance computing cluster.

Technical Configuration¶

This document refers to various hostnames, device names, and IP addresses. These references reflect the installation and configuration in a virtualised environment. Change the values to reflect your own configuration and environment.

Note

On the Head Node, one often has multiple hard drives. Optionally, the drives can be configured in a Redundant Array of Independent Disks (RAID) to provide redundancy or higher storage throughput. It is recommended that the head node has one (or more, in RAID) Solid-State Drive (SSD), Serial Attached SCSI (SAS), or Non-Volatile Memory Express (NVMe) drives for primary storage and as the boot drive.

Note

Ensure only the intended hard drive is selected when assigning storage in the installation wizard.

Global Configuration

Please use the following table to reflect the values for your implementation. Default values are prefilled.

General Information¶

| Description | Value |

|---|---|

| User Number | |

| Domain Name | |

| Domain Name Servers | |

| Head Node hostname | |

| Worker Node hostname prefix | |

| Number of Worker Nodes | |

| Username used during installation | |

| Group name of software owners | |

Networking¶

| Description | Value |

|---|---|

| Private Network | 10.0.0.0/255.255.255.0 |

| Head Node Private IP | 10.0.0.100 |

| Head Node Internet (Public) IP | 172.21.0.199 |

Filesystem Information¶

Node specific configuration¶

| Parameter | Value |

|---|---|

| Hostname | hn.examplesdomain.local |

| Additional aliases | login storage scratch nfs gw |

Storage

| Mount Point | Device | File System Type | FS Size | Recommended |

|---|---|---|---|---|

| /boot | sdb | xfs | 1 GiB | xfs, 1024 MiB |

| swap | sdb | swap | 4 GiB | Or 0.5 x RAM, if RAM > 8 GiB |

| / | sdb | xfs | 35 GiB + Free | xfs, remaining available space on smaller drive |

| /data | sda | xfs | 50 GiB + Free | xfs, all the available space on the larger drive |

Networking

Internet Interface

| Parameter | Value |

|---|---|

| Interface name | ens3 |

| IP method | auto |

| IP Address | 172.21.0.199 |

| DNS Servers | 8.8.8.8, 1.1.1.1 |

Please write down/remember the IP Address of the Internet Interface, as we need to SSH to this IP address from outside the host.

Private Interface

| Parameter | Value |

|---|---|

| Network Interface | ens4 |

| IP method | manual |

| IP Address | 10.0.0.100 |

| Subnet Mask or CIDR | 255.255.255.0 |

| Gateway | 10.0.0.100 |

| DNS Servers | 8.8.8.8, 1.1.1.1 |

| Parameter | Value |

|---|---|

| Hostname | wn01.examplesdomain.local |

Storage

| Mount Point | Device | File System Type | FS Size | Recommended |

|---|---|---|---|---|

| /boot | sda | xfs | 1 GiB | xfs, 1024 MiB |

| swap | sda | swap | 4 GiB | 64 GiB MAX on WNs. (On VMs, 4 GiB is fine) |

| / | sda | xfs | 35 GiB + Free | xfs, remaining available space |

Networking

Internet Interface

Please note that no dedicated interface is assigned to Internet traffic. Internet traffic is routed through the private interface using the IP address of the head node as the gateway.

Private Interface

| Parameter | Value |

|---|---|

| Network Interface | ens3 |

| IP method | manual |

| IP Address | 10.0.0.1 |

| Subnet Mask or CIDR | 255.255.255.0 |

| Gateway | 10.0.0.100 |

| DNS Servers | 8.8.8.8, 1.1.1.1 |

| Parameter | Value |

|---|---|

| Hostname | wn02.examplesdomain.local |

Storage

| Mount Point | Device | File System Type | FS Size | Recommended |

|---|---|---|---|---|

| /boot | sda | xfs | 1 GiB | xfs, 1024 MiB |

| swap | sda | swap | 4 GiB | 64 GiB MAX on WNs. (On VMs, 4 GiB is fine) |

| / | sda | xfs | 35 GiB + Free | xfs, remaining available space |

Networking

Internet Interface

Please note that no dedicated interface is assigned to Internet traffic. Internet traffic is routed through the private interface using the IP address of the head node as the gateway.

Private Interface

| Parameter | Value |

|---|---|

| Network Interface | ens3 |

| IP method | manual |

| IP Address | 10.0.0.2 |

| Subnet Mask or CIDR | 255.255.255.0 |

| Gateway | 10.0.0.100 |

| DNS Servers | 8.8.8.8, 1.1.1.1 |

Technical documentation¶

The following video briefly explains the use of the technical documentation and overview of cluster services.

Video

Connecting to the jump node¶

Follow the instructions on the information page to connect to the jump node. The following video shows how to add an SSH profile to connect to the Jump node using the Tabby SSH client.

Video

Connecting to the head node and worker nodes¶

The standard procedure to log into a worker node is described as follows:

- Log in to the jump node.

- From the jump node, SSH to the hn:

- To log into the worker nodes, you have to log in to the jump node, log in to the head node (hn) and then log into the required worker node.
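The login chain above can also be expressed with OpenSSH's `-J` (ProxyJump) option, which tunnels through intermediate hosts in one command. The sketch below is an illustration only: the jump node address `jump.example.org`, the accounts `usr99` and `hpc`, and the function names `to_hn` and `to_wn` are assumptions, so substitute the values given on the information page.

```shell
# Hypothetical values; replace with your own jump node address and usernames.
JUMP="usr99@jump.example.org"
HN="hpc@172.21.0.199"   # head node Internet (public) IP from the technical configuration

# Log in to the head node through the jump node in one step.
to_hn() {
    ssh -J "$JUMP" "$HN" "$@"
}

# Log in to a worker node through the jump node and then the head node.
to_wn() {
    wn_host="$1"
    shift
    ssh -J "$JUMP,$HN" "hpc@$wn_host" "$@"
}
```

`to_hn` opens a shell on the head node and `to_wn 10.0.0.1` continues to wn01. The worker address is resolved on the head node, so an alias such as `wn01` also works once it exists in the head node's `/etc/hosts`.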

Optionally, see the following video to create SSH profiles using Tabby to connect directly to the hosts without manually jumping from one node to the other.

Video

Automating the installation, using shell scripts¶

The following video explains how shell scripting can automate the creation of a cluster. It is recommended that participants write their own shell scripts as soon as possible to streamline the installation of a functional HPC.

Video

Installation Procedure¶

Note

You should first complete the technical documentation section to reflect your configuration before proceeding.

Preamble¶

In this document, you will see grey code blocks, for instance:

- Annotations are added in some code blocks.

- Text after a hash (#) is considered a comment and can be omitted from the command.

- Text in bold indicates a part where special care should be taken or what one should look out for in the results.

- It is possible to script each one of these code blocks.

  - Scripting these instructions takes time and effort. However, attempting to write scripts for this workshop is highly recommended.

  - To illustrate the impact:

    - I created a script (which took about an hour to make) that executes sections Four to Nine in a matter of seconds.

    - Students usually take six to eight hours (from previous workshops and cluster competitions) to perform these sections manually.

- Unless stated otherwise, these commands (code blocks) must be executed as the root user.

  - Usually, one should not be using the root user in this manner. Instead, one usually executes commands that require super-user privileges using the sudo command.

  - However, you can remain logged in as the root user to save time in this workshop.

Example Topology¶

There are various options and components to consider when designing a cluster. Your budget and design will determine the topology (the way nodes/networks are connected).

One design that is often followed to build a small cluster is indicated in the figure below. This is not the only or necessarily best solution, but it is chosen for its simplicity yet effectiveness.

Take some time to familiarise yourself with this design and use it to envision what the design physically looks like when configuring your nodes.

Note

In this figure, the values from the technical documentation section are used and should be changed before continuing.

Your device names will differ from ens3, ens4, enX0, etc. So, take care to change those too.

You may have to refresh this page to have modifications reflect in this diagram.

C4Container

title Basic Topology of the cluster

Person(p_user, "usr99", $sprite="person")

Component(int, "Internet", $sprite="cloud")

System_Boundary(net_priv, "Private Network") {

Component(switch, "Private Switch")

System(hn, "Head Node", "hn login storage scratch nfs gw", "Provides all the management functionality and NAT internet traffic to nodes")

System(wn01, "Worker Node 01", "wn01", "Worker node or also known as compute node")

System(wn02, "Worker Node 02", "wn02", "Worker node or also known as compute node")

}

Rel_R(p_user, int, "")

BiRel(int, hn, "172.21.0.199 (ens3)")

BiRel(hn, switch, "10.0.0.100 (ens4)")

BiRel(switch, wn01, "10.0.0.1 (ens3)")

BiRel(switch, wn02, "10.0.0.2 (ens3)")

UpdateLayoutConfig($c4ShapeInRow="2", $c4BoundaryInRow="1")

UpdateRelStyle(hn, int, $textColor="red", $lineColor="red", $offsetX="-50", $offsetY="20")

Install OS & Reboot (HN,SN & WNs)¶

Each node must be installed according to the design set out in the technical document. It is advisable to configure networking during the installation process, as this will save you time. It is recommended that all nodes be installed using a Kickstart file (outside the scope of this tutorial) so that all the configurations are uniform.

Head Node:¶

- If the HN is used to export the software and scratch directories, it could be helpful to add an extra hard drive.

- The HN can be installed and used in the same way as a Compute/Worker node, which will most likely be the case in the competition to save money.

- If the HN is also going to execute jobs, torque-mom must also be installed – if using Torque.

Worker Nodes:¶

- If a package/library is installed on one node, install the same package(s) on all nodes!

Video

Installing the head node¶

The following video explains how a machine can be reinstalled if needed. If you are installing a new machine (no previous OS was installed), you can skip the first two minutes of the video. The remainder of the video explains the installation of Rocky Linux 9.4 on the head node.

Installing the worker nodes¶

The following video was created to assist with installing the worker nodes in the cluster. The order in which the installation is performed does not matter, and it is possible to install all the nodes simultaneously if enough participants and bootup memory sticks (in the case of a physical installation) are available.

Reinstalling a node¶

The method of reinstalling a machine (any type of node) is discussed in the video under the Installing the head node section.

Follow all the instructions in the video if you reinstall a head node.

However, if you need to reinstall a worker node, follow the instructions in that video only up to 04:30. Then stop that video and continue with the video in the Installing the worker nodes section from 02:37 onwards.

Setup Network Interfaces & ping nodes¶

If the network interfaces have not been configured during installation, configure them now. Make sure that the HN can reach the internet and the public network. Make sure that each IP address of the nodes can be pinged from the HN, e.g.:

Private IPs:
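A reachability check like the one described above can be scripted. This is a minimal sketch that assumes the private addresses from the technical configuration; the function name `check_nodes` is made up for this example.

```shell
# Report whether each address answers a single ICMP echo request.
# Usage: check_nodes 10.0.0.100 10.0.0.1 10.0.0.2
check_nodes() {
    for addr in "$@"; do
        # -c 1: send one packet; -W 2: wait at most two seconds for a reply
        if ping -c 1 -W 2 "$addr" >/dev/null 2>&1; then
            echo "$addr reachable"
        else
            echo "$addr UNREACHABLE"
        fi
    done
}
```

Run it on the HN with all node addresses; every line should end in `reachable` before you continue.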

An IP address can be set with a configuration tool such as nmtui or by editing the NetworkManager files directly.

Example content of /etc/NetworkManager/system-connections/ens3.nmconnection on wn01 :

- Only set mtu > 1500 if your switch supports it and is configured for it. Do not set this (mtu=9000) on NICs connected to routers! Default value: mtu=1500

You'll notice a Maximum Transmission Unit (MTU) entry set to 9000. Using jumbo frames is helpful if your switch supports them, but your switch may require extra configuration to enable this feature. The default value is 1500.

The line starting with address1= specifies the IP address in CIDR notation, followed by the gateway for the network.

Note that the IP addresses and the gateway will differ depending on what the organisers specify.

Finally, notice that the method is set to manual, which is the recommended value. You may have forgotten to set it as such during installation, but it is essential to set it correctly to avoid unexpected behaviour.

Your device names will also differ, so your config file, for instance, may be:

/etc/NetworkManager/system-connections/ens8.nmconnection
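A keyfile with the properties discussed above (MTU, `address1=` in CIDR notation followed by the gateway, and `method=manual`) might look roughly like the one this sketch generates. The helper name `write_nmconnection` and the exact set of keys are assumptions for illustration; the file produced by your installer may contain more entries.

```shell
# Write a NetworkManager keyfile for a statically addressed interface.
# Usage: write_nmconnection <file> <interface> <ip/prefix> <gateway>
write_nmconnection() {
    cat > "$1" <<EOF
[connection]
id=$2
type=ethernet
interface-name=$2

[ethernet]
# Only raise the MTU if the switch is configured for jumbo frames (default: 1500)
mtu=9000

[ipv4]
method=manual
address1=$3,$4
dns=8.8.8.8;1.1.1.1;
EOF
    # NetworkManager ignores keyfiles that are readable by other users
    chmod 600 "$1"
}
```

For wn01 this could be called as `write_nmconnection /etc/NetworkManager/system-connections/ens3.nmconnection ens3 10.0.0.1/24 10.0.0.100`.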

A counterpart (scriptable) command to set the IP Address for ens3.nmconnection on wn01 would be:

After modifying the NetworkManager configuration file(s), restart the network service:
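The scriptable route and the restart step can be sketched with `nmcli` and `systemctl`. The function names are made up for this example, and the connection name is assumed to match the device name (`ens3`); check yours with `nmcli connection show`.

```shell
# Set a static IPv4 configuration on an existing connection profile and re-activate it.
# Usage: set_static_ip <connection> <ip/prefix> <gateway>
set_static_ip() {
    nmcli connection modify "$1" \
        ipv4.method manual \
        ipv4.addresses "$2" \
        ipv4.gateway "$3" \
        ipv4.dns "8.8.8.8 1.1.1.1" &&
    nmcli connection up "$1"
}

# After editing a keyfile by hand, restart the service so the file is re-read.
restart_network() {
    systemctl restart NetworkManager
}
```

On wn01 the call would be `set_static_ip ens3 10.0.0.1/24 10.0.0.100`.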

Video

Configure Firewalld - Firewall with NAT enabled (HN only)¶

Firewalld is a firewall that is widely used on GNU Linux. In recent years, Red Hat and SUSE Linux moved from iptables to Firewalld.

Firewalld should be installed by default, but we can ensure that it is the case:

Determine which device should be internal and which is external, according to the IP Addresses:

The abovementioned rules set up Firewalld, allowing NAT traffic through its public interface. Take note of the interface names (highlighted in green), as they may need to be modified. In this example, ens3 is the public interface (172.21.0.199) with Internet access through its gateway. The other interface (ens4) is the private network interface between the nodes, that is, the interface with a 10.0.0.xxx IP address.

After adding the above commands, or after modifying Firewalld rules, you have to restart the firewall service by executing:
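One common zone-based arrangement for the steps in this section is sketched below. It is an assumption, not necessarily the rule set used in the original workshop: the external interface goes into firewalld's `external` zone with masquerading, the private interface into the `internal` zone, and the rules are then reloaded. The function name `setup_nat` is hypothetical.

```shell
# Configure firewalld on the head node for NAT.
# Usage: setup_nat <external-interface> <internal-interface>
setup_nat() {
    ext_if="$1"
    int_if="$2"
    # Make sure firewalld is installed and running
    dnf -y install firewalld || return 1
    systemctl enable --now firewalld || return 1
    # Bind each interface to the zone that matches its role
    firewall-cmd --permanent --zone=external --change-interface="$ext_if" || return 1
    firewall-cmd --permanent --zone=internal --change-interface="$int_if" || return 1
    # Masquerade (NAT) traffic that leaves through the external zone
    firewall-cmd --permanent --zone=external --add-masquerade || return 1
    # Apply the permanent rules to the running firewall
    firewall-cmd --reload
}
```

With the interface names used in this document the call is `setup_nat ens3 ens4`. Afterwards, `firewall-cmd --get-active-zones` shows which interface ended up in which zone.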

To test that it works, log into one of the worker nodes and ping an external IP:

To set the default gateway (in this case for a worker node), we can specify the default routing interface in /etc/sysconfig/network :

Note

Whenever you are setting up a service that works through the network, and it seems that it is not working, temporarily stop firewalld on both machines and try again.

If it works, then add the correct entries to the firewall rules. Also, make sure to start firewalld again afterwards.

Another helpful file is /etc/services. It lists several standard services with the ports on which they run.

Video

Modify /etc/hosts to contain all node names and IP addresses (HN)¶

The /etc/hosts file contains the hostnames and local IP addresses of machines you want to refer to that do not use a Domain Name Server (DNS).

It is a good idea (if you don't have access to a DNS) to add the IP addresses and different names of the nodes into this file so the system can resolve them as needed.

The format of the file is simple. Keeping the local and localhost IP addresses in the file is vital.

Here is an example of a /etc/hosts file:

The IP address (10.0.0.100) is the IP address that will be returned if you, for instance, try:

The same goes for the other IP addresses in the file; they will be returned when resolving one of the aliases, such as: wn01 or wn02 or node01, etc.
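For the three nodes in this tutorial, the cluster entries could be generated as in the sketch below. It uses the hostnames, aliases and addresses from the technical configuration; the function name `cluster_hosts` is made up for this example.

```shell
# Print the cluster entries for /etc/hosts (values from the technical configuration).
cluster_hosts() {
    cat <<'EOF'
10.0.0.100  hn.examplesdomain.local    hn login storage scratch nfs gw
10.0.0.1    wn01.examplesdomain.local  wn01
10.0.0.2    wn02.examplesdomain.local  wn02
EOF
}
```

Append the output with `cluster_hosts >> /etc/hosts`, leaving the existing 127.0.0.1 and ::1 lines in place. A lookup such as `getent hosts storage` should then return the 10.0.0.100 entry.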

Video

Create an SSH Key for root and copy it to WNs (HN)¶

Using SSH Keys to log onto nodes is very important in a cluster. This allows users to log in to nodes without using a password. It is essential in a cluster because when a job is started from the HN, the job will act as that user and log in to the remote node(s). If it needs a password to log in, the job will fail. This is especially true for MPI jobs (discussed later).

An SSH Public Key or Certificate can be shared, emailed or copied to others. However, the Private Key/Certificate should never be shared with other people. If a Private Key is shared/compromised, other people can use it to log into the system using your account without a password. If a Private Key is stolen or shared, that key must no longer be used and should be replaced immediately.

As root, execute the following:

If the previous command failed, execute this code block instead:

Modify the username (hpc) above to reflect your regular username (not root).

This example is tricky since the root user cannot SSH using a password (before this step is executed).
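The basic flow (generate a key pair without a passphrase, then install the public key on each worker node) can be sketched as follows. The function name `setup_root_keys` and the choice of the ed25519 key type are assumptions. Note that `ssh-copy-id` needs one password login as root on the target, so this sketch does not cover the fallback through a regular account mentioned above.

```shell
# Create a key pair for the current user (root here) if none exists,
# then install the public key on each node passed as an argument.
# Usage: setup_root_keys wn01 wn02
setup_root_keys() {
    key="$HOME/.ssh/id_ed25519"
    mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
    # -N "" sets an empty passphrase so that logins need no interaction
    [ -f "$key" ] || ssh-keygen -q -t ed25519 -N "" -f "$key" || return 1
    for node in "$@"; do
        # Prompts once for root's password on the node
        ssh-copy-id -i "$key.pub" "root@$node" || return 1
    done
}
```

After a successful run, `ssh root@wn01 hostname` should print the node's name without asking for a password.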

Warning

It is crucial that the commands are executed successfully before continuing, or you will have errors when executing later commands.

Video

Configure SSH Service and turn off root login using a password (HN WNs)¶

Root login from remote machines (Internet) is dangerous, so we prevent the root user from logging in from remote locations (over any network interface) using a password.

However:

- The root user will still be able to log in at the physical machine using a password

- Users that have sudo privileges will still be able to become root

- The root user can only log in from remote locations using SSH certificates/keys

To-do: Modify /etc/ssh/sshd_config

Look for the following parameters and modify/add the following values where needed:

| Modify /etc/ssh/sshd_config | |

|---|---|

- Set this value to yes if you are struggling

- Speed up the logins from remote hosts

The lines three and four are the important ones; the others should already exist or are the defaults.

After modifying this file, you will have to restart the SSH service:
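The directives below are one plausible set that matches the behaviour described in this section; they are an assumption, and their order need not match the original listing. `PermitRootLogin prohibit-password` allows key-based root logins only, and `UseDNS no` skips reverse DNS lookups. The function names are hypothetical.

```shell
# Print the sshd_config directives discussed in this section.
sshd_settings() {
    cat <<'EOF'
# root may log in with a key, but not with a password
PermitRootLogin prohibit-password
PubkeyAuthentication yes
# Regular users may still use passwords
PasswordAuthentication yes
# Skip reverse DNS lookups to speed up logins
UseDNS no
EOF
}

# Validate the configuration before restarting, so a typo cannot lock you out.
restart_sshd() {
    sshd -t && systemctl restart sshd
}
```

Compare the output of `sshd_settings` with `/etc/ssh/sshd_config`, adjust the file, and then run `restart_sshd`. Keep your current session open until a new login has been confirmed to work.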

Video

Disable SELinux (HN & WNs)¶

SELinux is used to harden security on a GNU Linux system (Red Hat family). It restricts users and services from accessing files, ports, connection types and devices without pre-approved permission. For instance, Apache is allowed to serve websites from a specific location. If files are placed outside that location and Apache is configured to publish them, an SELinux violation will occur because SELinux restricts access to that location.

It is best practice to keep SELinux running on a production server, but for our purpose here, it is easier for you to disable SELinux than having to "debug" that, too.

Modify the /etc/selinux/config file and change the SELINUX line to reflect the following:

| Modify /etc/selinux/config | |

|---|---|

Warning

Change only the SELINUX entry mentioned above, not SELINUXTYPE=xxxxx

An invalid entry in this file will leave your system unable to boot when rebooted.

It is possible to recover from that, but it is pretty technical and will not be discussed here.

After modifying the SELINUX parameter, you must reboot the system for the changes to take effect.

However, if you are not willing/allowed to reboot the system, you can execute the command:
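Because the file contains both `SELINUX=` and `SELINUXTYPE=`, a scripted edit should match only the former. The sketch below does this with an anchored `sed` pattern; the function name `set_selinux_mode` is made up for this example.

```shell
# Set the SELINUX= mode in a config file without touching SELINUXTYPE=.
# Usage: set_selinux_mode <mode> [file]
set_selinux_mode() {
    cfg="${2:-/etc/selinux/config}"
    # The anchored pattern matches "SELINUX=" only, never "SELINUXTYPE="
    sed -i "s/^SELINUX=.*/SELINUX=$1/" "$cfg"
}
```

`set_selinux_mode disabled` prepares the change for the next reboot. Without a reboot, `setenforce 0` switches the running system to permissive mode, and `getenforce` shows the current mode.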

Video

Create user accounts, add to the admin (wheel) group and give a password (HN) - Optional¶

Adding a group that will become the cluster's software owner is helpful. When regular user accounts are created, those users can then just be added to the group, and they will have the required access to the software:

Note

It is good practice to use the root administrator account only when it is essential.

A system group exists that already has some root privileges set to it.

The group wheel is configured in the sudoers file, which allows group members to execute commands as root.

To create a user that is a member of wheel, the following command can be executed:

The above command will create a user called your_username (change to the value you want) that belongs to the groups wheel and hpcusers.

The user's home directory (-m) will also be created.

To modify an existing user:

After typing the above command, the user is prompted to enter and confirm the password. Nothing is displayed on the screen while the user is typing the password.
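The account steps in this section can be collected in two small helpers. This is a sketch: the function names are hypothetical, and `hpcusers` is the group name used in the text above.

```shell
# Create the software-owner group if needed, then a user in wheel and that group.
# Usage: add_cluster_user <username> [group]
add_cluster_user() {
    grp="${2:-hpcusers}"
    # Create the group only if it does not exist yet
    getent group "$grp" >/dev/null || groupadd "$grp" || return 1
    # -m creates the home directory; -G sets the supplementary groups
    useradd -m -G "wheel,$grp" "$1"
}

# Add an existing user to the same groups (-a appends instead of replacing).
# Usage: extend_cluster_user <username> [group]
extend_cluster_user() {
    usermod -aG "wheel,${2:-hpcusers}" "$1"
}
```

After `add_cluster_user your_username`, set the password interactively with `passwd your_username`.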

Video

Setup sudo rights (HN)¶

The sudoers file permits specific users to execute commands requiring root privileges on the system.

The file that controls all the sudo system rights is: /etc/sudoers

After adding members to the wheel group, they will, by default, have access to execute commands as root because of the line that reads:

You may also opt to add a line in /etc/sudoers or simply remove the # in front of the existing line to allow sudo commands without prompting for your password.

The line should look as follows:

The file is mentioned here in case you need to change it or want to allow specific users to execute only some particular commands. This file can also be copied to other hosts requiring the same permissions, which will be discussed in a later section on file synchronisation.

Video

Each user logs in and generates their SSH Keys (HN)¶

In a previous section, we discussed creating SSH Keys for the root user.

The procedure is similar for other users, except the users' home directories will be shared between the nodes, and thus, the generated keys don't need to be copied over to other nodes.

However, one needs to add the public key to the authorized_keys file, which lists the public keys that can be used to authenticate as the user.

All the system users should execute the following commands to allow them to connect password-less to the nodes:

| Create a file: /etc/profile.d/ssh_keys.sh | |

|---|---|

Tip

Adding the above code into a script executed on the Head Node when a user logs in will be helpful.

If you opt to add it into such a script, place the content in a script in something like /etc/profile.d/ssh_keys.sh and remember to make it accessible for all users:
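A login script of this kind could be built around the function below. It is a sketch under assumptions: the function name `ensure_user_ssh_key` and the ed25519 key type are choices made for this example, not necessarily those of the original script.

```shell
# Create a passphrase-less key pair on first login and authorise it for the user.
ensure_user_ssh_key() {
    key="$HOME/.ssh/id_ed25519"
    # Key already exists: nothing to do
    [ -f "$key" ] && return 0
    mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
    ssh-keygen -q -t ed25519 -N "" -f "$key" || return 1
    # Authorise the new key; the shared /home makes this valid on every node
    cat "$key.pub" >> "$HOME/.ssh/authorized_keys"
    chmod 600 "$HOME/.ssh/authorized_keys"
}
```

In `/etc/profile.d/ssh_keys.sh`, follow the definition with a call to `ensure_user_ssh_key`, and set the file mode with `chmod 0755 /etc/profile.d/ssh_keys.sh`.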

Video

If generic passwords were used, each member must change their password (HN)¶

Note

This is optional if you created multiple user accounts for other members (e.g. member1, member2, etc.).

If you made use of a generic password or did not set the password for the other members of the team, you may want to do it now because the passwords will be synced to the rest of the nodes in the following steps.

Video

Sync the /etc/{passwd, hosts, group, shadow, sudoers} files to WNs (HN)¶

After ensuring that all the team members have set their passwords, are in the wheel group, and that the sudoers and hosts entries are correct, you should copy the configuration files to all the nodes.

Ensure all the nodes are powered on at this stage and their IP addresses are configured correctly.

The important files can be copied by executing the following command on the HN:

The abovementioned command should be manually executed whenever a user is added to the system, when a host/node is added, or when its IP address is changed.
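Since the synchronisation has to be repeated regularly, it is worth wrapping in a function. A minimal sketch, assuming root's SSH key is already installed on the worker nodes; the name `sync_cluster_files` is made up for this example.

```shell
# Copy the account and host files from the HN to each worker node.
# Usage: sync_cluster_files wn01 wn02
sync_cluster_files() {
    for node in "$@"; do
        # -p preserves modes and timestamps (shadow must stay unreadable to users)
        scp -p /etc/passwd /etc/hosts /etc/group /etc/shadow /etc/sudoers \
            "root@$node:/etc/" || return 1
    done
}
```

The function stops at the first node that cannot be reached, so a failure is not silently skipped.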

Note

Some applications installed from RPM or through DNF also add users.

To be safe, synchronise the files again after installing an RPM on the HN.

To be safe on the nodes' side, first sync the files from the HN before installing an RPM.

Video

Perform a dnf update (HN, SN & WNs)¶

After installing the nodes and if they have internet connectivity, update all the machines:

Video

Create scratch, soft and home directories and setup NFS (HN or SN)¶

As mentioned, the /scratch, /soft, and /home directories must be shared over the network between all the nodes.

To achieve this, we need to export them using NFS.

The files and directories will be physically stored on your Storage/Head Node's hard drive, but users can access them on the nodes through the network.

Note

Before setting up NFS, I would recommend that the directories that need to be shared are created and that all reside in a logical path.

I usually make a /exports or /data directory.

Even though we are talking about a directory here, it could (and should) be a different disk or partition than the root (/) filesystem.

Warning

It is essential for NFSv4 and higher that a host/node has its domain specified and all the nodes/servers have the SAME domain name.

The domain name does not have to be an "internet-friendly" or routable domain name, so a domain like mydomain.local will suffice.

| Execute the following on the HN | |

|---|---|

Assumptions

- You have a Solid-State Disk (or faster, such as m.2, SAS, or NVMe) that will be used for the homes, scratch and software storage.

- SELinux is turned off on all the nodes and servers or correctly configured (out of scope for this document).

- All nodes and servers have their hostnames set with a domain; the domain name (examplesdomain.local) is the same on all nodes.

- You have mounted this disk on /data and added the mount point to your /etc/fstab, so that it is automatically mounted after the machine is restarted.

The NFS server configuration is done on the same storage, scratch, or head node(s) we configured above.

Also, edit /etc/exports to contain the following (Note: you have to change the IP addresses to reflect yours):

| /etc/exports | |

|---|---|

The async option is used to cache content in memory before writing it to disk. This allows the node to continue while the server writes the data in the background onto the hard drive.

The risk is that data may be lost or corrupted if the server is restarted or network connectivity is lost before the cached data is written to disk.

We can accept this trade-off here for better performance, if you are willing to take the risk.

If no_root_squash is used, remote root users can change any file on that shared file system.

This is acceptable in our trusted environment but should be removed if you export to untrusted sources outside your cluster.

For the changes to be applied, the following services should be restarted:

| On the head node | |

|---|---|
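The export list and the service restart might be scripted as shown below. The directory layout (`/data/home`, `/data/scratch`, `/data/soft`) is an assumption derived from the `/data` mount point, and the function names are hypothetical; the options are the `async` and `no_root_squash` options discussed above.

```shell
# Print /etc/exports entries for the three shared directories.
# Usage: nfs_exports [client-network]
nfs_exports() {
    net="${1:-10.0.0.0/24}"
    for dir in home scratch soft; do
        echo "/data/$dir $net(rw,async,no_root_squash)"
    done
}

# Install and start the NFS server, then (re)publish the export list.
# The exported directories must already exist.
start_nfs_server() {
    dnf -y install nfs-utils || return 1
    systemctl enable --now nfs-server || return 1
    # Re-read /etc/exports
    exportfs -ra
}
```

After `nfs_exports >> /etc/exports` and `start_nfs_server`, the command `exportfs -v` lists what is currently being exported.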

Video

Mount NFS Exports in the correct paths and modify fstab (WNs)¶

After the NFS "server" has been configured, you can mount the exported filesystem on all WNs.

Note

We use alias hostnames (scratch and storage) below.

These aliases were set in the section on adding the /etc/hosts entries, and for this workshop, they may all refer to the same node, such as wn01 or hn.

However, they will indicate dedicated hosts in a production environment.

Danger

Take extra caution when modifying the /etc/fstab file.

If you change one of the values incorrectly, your system will not be bootable.

When rebooting, you will end up in an emergency console.

A possible fix can be seen at:

How to fix boot failure due to incorrect fstab

However, nothing is guaranteed; you may even have to reinstall the system to save time.

I would, therefore, make an initial backup of the /etc/fstab first in case we need to revert:

| Create a backup, if none exists | |

|---|---|
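A backup that is only taken once keeps the first, known-good copy even if the step is repeated later. A minimal sketch; the `.orig` suffix and the function name `backup_once` are assumptions.

```shell
# Back up a file once; later runs leave the first (known-good) copy untouched.
# Usage: backup_once [file]
backup_once() {
    src="${1:-/etc/fstab}"
    # -p preserves ownership, mode and timestamps
    [ -e "$src.orig" ] || cp -p "$src" "$src.orig"
}
```

To revert, copy `/etc/fstab.orig` back over `/etc/fstab`.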

Install nfs-utils and test the mounting operation:

If those commands were all successful, you should modify the node's /etc/fstab file by ADDING the following:

| Add the following lines to the end of your /etc/fstab (on all nodes) | |

|---|---|

After modifying the /etc/fstab, you can execute the following command to ensure everything is mounted correctly.

- No errors should be displayed
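The fstab entries could look like the output of this sketch. The server-side paths under `/data` are assumptions that must match your `/etc/exports`; `storage` and `scratch` are the aliases from `/etc/hosts`, and the function name is made up for this example.

```shell
# Print NFS entries for /etc/fstab using the storage and scratch aliases.
# _netdev delays mounting until the network is available.
nfs_fstab_entries() {
    cat <<'EOF'
storage:/data/home     /home     nfs  defaults,_netdev  0 0
storage:/data/soft     /soft     nfs  defaults,_netdev  0 0
scratch:/data/scratch  /scratch  nfs  defaults,_netdev  0 0
EOF
}
```

On a worker node, create the mount points with `mkdir -p /scratch /soft`, try one mount by hand (for example `mount -t nfs storage:/data/soft /soft`), and only then append the entries with `nfs_fstab_entries >> /etc/fstab` followed by `mount -a`.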

You can also use the following mount options to improve performance; read up on them before applying them:

rw,tcp,noatime,hard,intr,rsize=32768,wsize=32768,retry=60,timeo=60,acl

If you have difficulty mounting something, log into the server (scratch or storage in this case) where you are mounting from, look in /var/log/messages for messages about why something might fail, and see if the firewall isn't blocking you.

Video

Ensure password-less SSH works from Head Node to Worker nodes and back¶

After NFS is set up on all the nodes, all users (not just root) should be able to SSH to all the nodes without typing in a password.

Test this using one of the members' accounts (or hpc).

SSH to wn01, then exit and do the same for wn02 and any further worker nodes.

Also, make sure to SSH from one of the nodes back to the HN.

Here is a script that might be of assistance:
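A check of this kind can be sketched as follows; the function name `check_ssh` is made up for this example. `BatchMode=yes` makes `ssh` fail instead of prompting for a password, and `StrictHostKeyChecking=accept-new` records the key of a host that is contacted for the first time.

```shell
# Try a non-interactive login to every host and report the result.
# Usage: check_ssh hn wn01 wn02
check_ssh() {
    for host in "$@"; do
        if ssh -o BatchMode=yes -o StrictHostKeyChecking=accept-new \
               -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "$host: OK"
        else
            echo "$host: FAILED"
        fi
    done
}
```

Run it as a regular user on the HN, and again on a worker node with `hn` in the list, to test the route back.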

Note

You will notice that you must type in "yes" the first time you connect to a host; it is essential to know this because if a user tries to run an MPI job and hasn't SSH'ed to that node name, the job will hang. Notice I said: "to that node's name"... it can also be any alias, such as wn01-ib, etc.

Video

Install Environment Modules (HN & WNs)¶

The Environment Modules package is beneficial for managing users' environments. It allows you to write a module file for multiple software versions and then lets the user choose which version they would like to use.

For instance, you can install four different versions of GCC and then just use the one you require for a specific purpose.

It becomes beneficial when installing scientific software because one researcher usually uses a particular version for their research, while another researcher needs another version for their study.

In a later section, you will use a preinstalled environment module file, but for now, we first have to install the package to use that functionality.

You can install Environment Modules by downloading the latest version from the official modules website or install the package through dnf.

I would recommend the dnf install method because you have to install this package on all the nodes, and the dnf package available online will suffice for this exercise.

Note

After installing, you may have to log in again to execute the module command.

The above install creates a few modules in /usr/share/Modules/modulefiles.

They can be helpful to look at.

To see the modules available, execute the following command:

To achieve this, we can create a file called /etc/profile.d/zhpc.sh, which is loaded when a user logs in to set the MODULEPATH.

We make the filename zhpc.sh because the scripts in the /etc/profile.d/ directory are executed in alphabetical order, and we need /etc/profile.d/modules.sh to be executed before our script is loaded.

The following commands (here document) will create the files and make them executable:

Creating a generic module and copying it to all the nodes is recommended.

This module holds generic configurations in variables which nodes can use.

Here is what is suggested:

Create a file: /usr/share/Modules/modulefiles/hpc with the content:

Then add the following line in a file (on all nodes) called /etc/profile.d/zmodules_hpc.sh:

| /etc/profile.d/zmodules_hpc.sh | |

|---|---|

This will load the hpc module every time a user is logged in.
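The three files described in this section could be generated as in the sketch below. The file contents are assumptions based on the behaviour described here (adding `/soft/modules` to `MODULEPATH` and `/soft/hpc` to `PATH`); the original files may set further variables. The function name `write_module_files` is hypothetical.

```shell
# Write the three files below <root> ("/" on a real node, a scratch dir to preview).
# Usage: write_module_files <root>
write_module_files() {
    root="${1%/}"
    mkdir -p "$root/etc/profile.d" "$root/usr/share/Modules/modulefiles" || return 1

    # 1. Extend MODULEPATH at login (sorted after modules.sh thanks to the "z" prefix)
    cat > "$root/etc/profile.d/zhpc.sh" <<'EOF'
export MODULEPATH="${MODULEPATH:+$MODULEPATH:}/soft/modules"
EOF

    # 2. Generic module (Tcl syntax): puts cluster-wide scripts on the PATH
    cat > "$root/usr/share/Modules/modulefiles/hpc" <<'EOF'
#%Module1.0
module-whatis "Generic cluster settings"
prepend-path PATH /soft/hpc
EOF

    # 3. Load the generic module for every login shell
    echo "module load hpc" > "$root/etc/profile.d/zmodules_hpc.sh"

    chmod 0755 "$root/etc/profile.d/zhpc.sh" "$root/etc/profile.d/zmodules_hpc.sh"
}
```

Install the package first with `dnf -y install environment-modules`, run `write_module_files /`, log in again, and check the result with `module avail` and `module list`.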

Note

The benefit of this module is that the environment will be set up so that modules put in /soft/modules will be available to be loaded by users.

An entry is made to add /soft/hpc as a location where scripts can be put that will be in the user's path.

The users will automatically be able to execute scripts in this path, which have their executable flag set using chmod 0755 /soft/hpc/xxxx.

Note that when a script is added in the /soft/hpc/ path, you only need to set the executable flags (chmod) once because /soft is exported to all nodes.

Warning

Remember to copy the /etc/profile.d/zmodules_hpc.sh, /etc/profile.d/zhpc.sh and the /usr/share/Modules/modulefiles/hpc files to all the machines, so you can create it on one node and scp it to the other nodes:

Video

Performance Tuning (HN & WNs)¶

Some performance tuning can be done within the GNU Linux environment itself.

Numerous optimisations will enhance your machines' performance in an HPC environment.

However, they are specific to the equipment that is used.

For this reason, we will only set up a few important ones, such as the CPU throttling by the kernel.

Change or add the following entries in /etc/security/limits.conf :

| /etc/security/limits.conf | |

|---|---|

These settings will only be applied after a system reboot (which will be done in the next section) and can then be viewed with the command:

The following script should be executed on all nodes to add a new performance-tuning module (CPU Throttling) to your GNU Linux environment:

Important BIOS settings¶

Some modifications should also be made to all the nodes' BIOS.

The most important (if they exist in your BIOS) settings are:

| Description | Value |

|---|---|

| Power | Configure to use Max power if there is such an option |

| P-State | Disabled - This is also enforced by the script executed above |

| C-State | Disabled |

| Turbo Mode | Enabled - Specific to Intel CPUs |

| Hyper-Threading | Disabled - Intel |

| I/O Non-Posted Prefetching | Disabled - Intel Haswell/Broadwell and onwards CPUs |

| CPU Frequency | Set to Max |

| Memory Speed | Set to Max |

| Memory channel mode | Set to independent |

| Node Interleaving | Disabled - We need to enable NUMA |

| Channel Interleaving | Enabled |

| Thermal Mode | Set to Performance mode |

| HPC Optimizations | Enabled - AMD Specific |

Also, see an example of HPC BIOS settings for more information.

Note

The BIOS settings are not available in Virtual Machines.

However, if you are working on Virtual Machines and reached this point, it may be an excellent time to make a Snapshot.

Video

Install the Intel OneAPI Compiler (HN)¶

This section will be added later (watch the video for the content).

Make the modules available (WNs)¶

Execute the following commands on all the worker nodes to have the modules available later. During the Intel Compiler installation procedure, you will execute a script that creates the module files. However, the absolute path will be read as /data/soft. The worker nodes don’t have the software in that path. Rather, they have the software mounted on /soft.

| On all the worker nodes | |

|---|---|
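One way to make the `/data/soft` path resolve on the worker nodes is a symbolic link to the NFS mount at `/soft`. This is an assumption about the intended approach, and the function name `link_data_soft` is made up for this example.

```shell
# Make <root>/data/soft point to /soft on a worker node.
# Usage: link_data_soft [root]   (root defaults to the real filesystem)
link_data_soft() {
    root="${1:-}"
    mkdir -p "$root/data" || return 1
    # Skip if a link or a real directory is already there
    [ -L "$root/data/soft" ] || [ -e "$root/data/soft" ] || ln -s /soft "$root/data/soft"
}
```

Running `link_data_soft` as root on each worker node creates `/data/soft`, after which `ls /data/soft` should show the same content as `/soft`.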